In the realm of real-time analytics, Apache Pinot stands out as a powerful real-time OLAP database designed for ultra-low latency and high throughput. As user-facing applications demand more complex queries, the need for efficient query execution becomes paramount.

This post delves into the evolution of Apache Pinot’s query execution capabilities, focusing on the transition from a single-stage engine to a multi-stage approach. We will explore the technical details, optimizations, and potential use cases that arise from this new architecture.

Background of Apache Pinot

Apache Pinot was born at LinkedIn, when they needed a real-time, distributed OLAP database designed to handle queries for their hundred million user base (now over 1 billion users). Since then it has been put into production at hundreds of enterprises, including Uber, Stripe, 7-11, Wix, and DoorDash.

The reason for its success comes from its architecture. Apache Pinot was designed to provide immediate insights at high concurrencies from large volumes of data measured in petabytes. Its design decisions, including an efficient columnar storage format and flexible, pluggable indexing techniques, allow it to ingest millions of events per second while maintaining high performance. While designed to handle real-time streaming data ingestion from services like Apache Kafka, Pinot’s architecture also supports batch ingestion, making it versatile for various use cases.

Single-Stage Query Engine: A Quick Overview

Initially, Apache Pinot utilized a single-stage query engine. This scatter-gather architecture allowed for efficient execution of simple analytical queries against denormalized data in a single table. For example, consider a classic use case where a LinkedIn user wants to know how many users viewed their profile in the past month. The query could be structured as follows:

SELECT * FROM user_views WHERE user_id = 'my_id' AND view_time > 'last_month'

Code language: JavaScript (javascript)In this model, the broker distributed the query across all relevant servers, which processed the data and returned results with minimal latency. However, this approach faced challenges when more complex queries, such as joins between multiple tables, were introduced.

The Need for More Complex Queries

As the demand for deeper insights grew, users sought to combine data from multiple tables. For instance, to find out how many users who worked at LinkedIn viewed a specific profile, a join between the user profile table and the user views table was necessary. This requirement pushed the boundaries of the single-stage engine, revealing its limitations.

Challenges of Single-Stage Joins

Single-stage joins in Apache Pinot faced numerous challenges, largely because of problems related to scalability. The initial approaches included:

- Pre-Join Tables: This method involves denormalizing data by combining tables before ingestion. While effective, it led to data duplication and complicated maintenance.

- Lookup Joins: In this approach, the user profile table was loaded onto every server to facilitate real-time lookups. However, this became unmanageable as the size of the user profile table increased.

- Multiple Single-Stage Queries: This required sending multiple queries sequentially, introducing network overhead and inefficiencies.

Each of these methods highlighted a need for a more efficient engine capable of handling complex queries without sacrificing performance.

Introducing the Multi-Stage Query Engine

The multi-stage query engine was developed to address the limitations of the single-stage approach. This architecture allows for the execution of complex queries by breaking them into manageable stages, each optimized for performance.

How Multi-Stage Execution Works

The multi-stage engine processes queries in a more sophisticated manner. Instead of executing a single scatter-gather operation, the engine can chain multiple stages together, allowing for greater flexibility and efficiency. Here’s how it works:

- The initial query is parsed and broken down into multiple stages, each assigned to different servers.

- Each stage can utilize the indexing capabilities of Apache Pinot, optimizing data retrieval and reducing unnecessary data shuffling.

- Results from one stage can serve as inputs for the next, minimizing the amount of data transferred across the network.

This model allows for more complex queries to be executed efficiently, leveraging the strengths of Apache Pinot’s indexing and storage capabilities.

Performance Optimizations in the Multi-Stage Engine

To ensure that the multi-stage engine performs on par with its single-stage predecessor, several optimizations were implemented:

Predicate Pushdown

One of the key optimizations is predicate pushdown, which allows filters to be applied at the earliest possible stage of query execution. This reduces the amount of data needing to be processed in subsequent stages, leading to significant performance gains.

Data Collocation

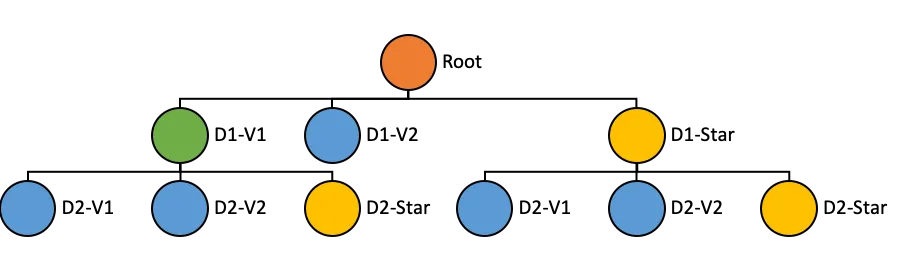

By ensuring that related data across multiple tables is stored on the same server, the engine can minimize the need for data shuffling during joins. This collocation is achieved through careful partitioning strategies defined by the user.

Utilizing Pre-Computed Results

Apache Pinot’s star-tree indexing allows for pre-computed aggregations. This means that for certain queries, the engine can quickly return results without needing to perform extensive calculations at runtime.

Use Cases for the Multi-Stage Query Engine

The introduction of the multi-stage query engine opens up numerous possibilities for real-time analytics applications. Here are a few potential use cases:

- User-Facing Analytics: Applications that require quick insights from user data can benefit significantly from the multi-stage engine’s low-latency performance.

- Complex Event Processing: Analyzing real-time events across multiple data sources can be achieved efficiently with the ability to perform joins on-the-fly.

- Business Intelligence: Organizations can run complex analytical queries that combine historical and real-time data to gain actionable insights.

- Observability: Apache Pinot can also be used for real-time data analysis of logs, metrics or traces. Joins can be used across different tables with time-series data.

Conclusion

Apache Pinot’s evolution from a single-stage to a multi-stage query engine marks a significant advancement in its capability to handle complex analytical queries. By leveraging optimizations such as predicate pushdown and data collocation, the multi-stage engine ensures that users can gain insights in real-time without compromising performance. As user demands continue to grow, Apache Pinot stands ready to meet the challenge, providing the tools necessary for modern analytics.

Feedback and Future Developments

The community’s feedback is crucial as we continue to enhance the multi-stage engine. Future releases will focus on stability, usability, and expanding the feature set to accommodate a broader range of use cases. Check out the “multi-stage” tag in the Apache Pinot Github repository for the latest advances and community requests.