A new interface for AI-native analytics workflows on Apache Pinot.

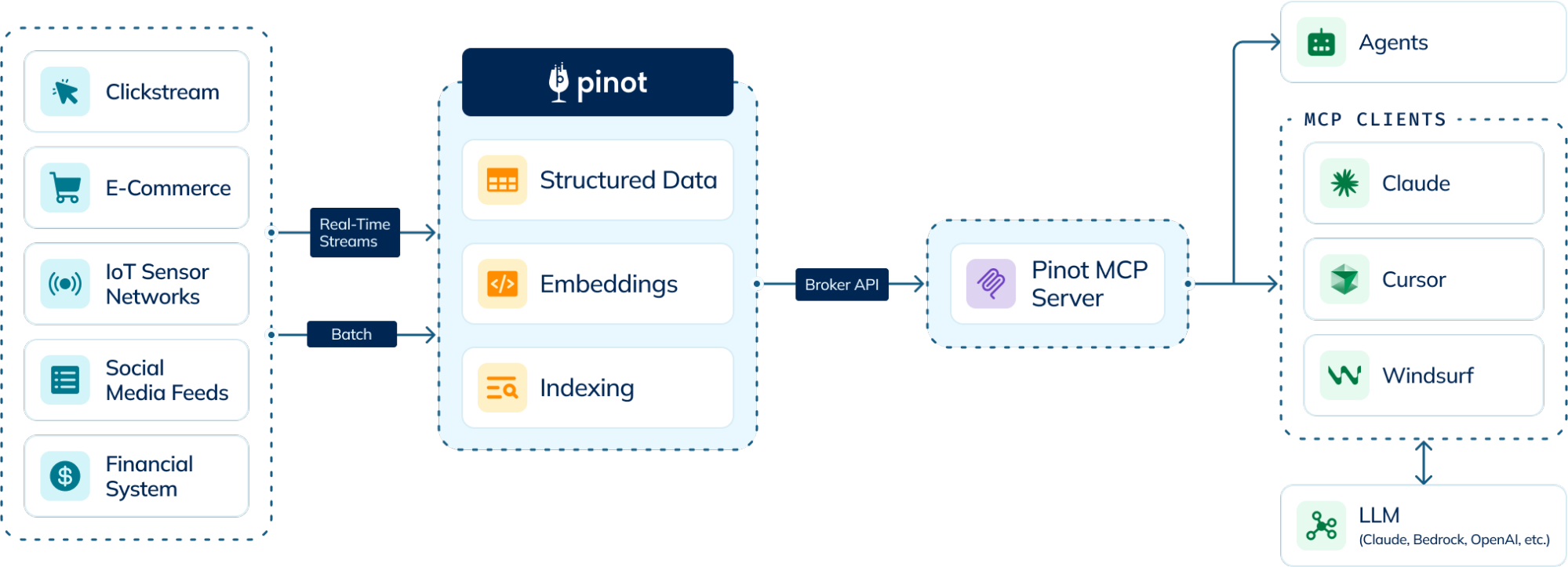

In the race to build truly advanced artificial intelligence (AI) systems, such as Retrieval Augmented Generation (RAG) architectures, a critical piece has been missing: the ability for AI agents to easily integrate with real-time structured business data and unstructured data (vectors) at enterprise scale. This began to change with the introduction of the Model Context Protocol (MCP), a standardized way, pioneered by Anthropic, for large language models (LLMs) to integrate with corporate sources of truth. Today, we’re excited to announce the launch of StarTree MCP Server for Apache Pinot, a breakthrough that transforms how AI agents interact with your mission-critical data.

StarTree MCP Server: Your New Query Console (Reimagined)

StarTree MCP Server represents a profound transformation in how businesses engage with their data. Move beyond traditional query consoles and complex SQL. MCP Server is the database client reinvented for the AI age.

Leveraging MCP, a standardized interface for AI model interaction with external tools and data sources, we’ve engineered a next-generation query experience with powerful features:

Key Features & Capabilities

- Real-time Data Freshness: Access the most current data available with Pinot’s real-time ingestion capabilities, ensuring AI agents work with up-to-the-second information

- Millisecond Query Performance: Leverage Pinot’s industry-leading query speed for instantaneous responses, critical for maintaining AI agent context and fluid interactions

- Intelligent Schema Discovery: Automatically identify and navigate table relationships, columns, and data types without manual configuration

- Natural Language Interface: Transform plain English questions directly into precise database results without writing SQL, a process known as Natural Language to SQL (NL2SQL)

- Interactive Context Maintenance: Preserve analytical context across multiple queries, enabling complex multi-step analysis without drifting

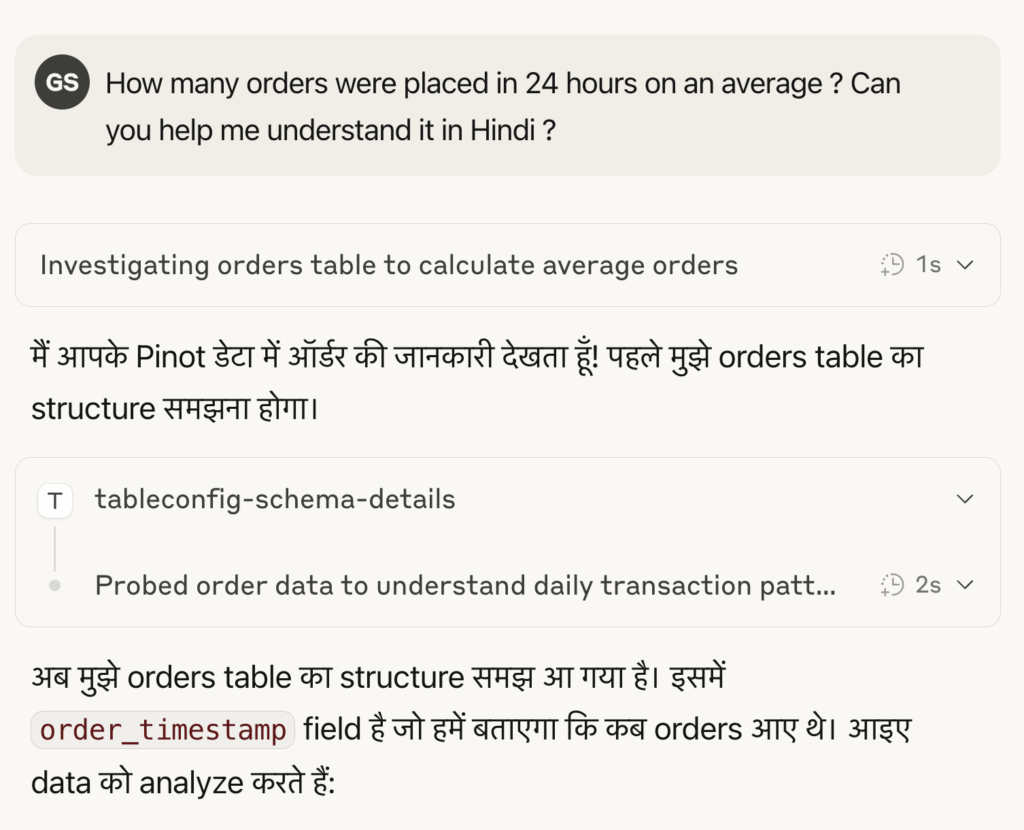

- Inference in Your Local Language: Interact with your data in your preferred language with automatic query translation (subject to the capabilities of the LLM you use with MCP Server)

- Advanced Metadata Integration: Access comprehensive table information, segment details, and system performance metrics through standardized API endpoints

- Performance Monitoring Tools: Identify query bottlenecks, resource constraints, and optimization opportunities with built-in diagnostics

- Custom Context (coming soon): Add domain-specific context in a configurable file, allowing your MCP client to be distributed with preloaded context that doesn’t require repeated typing

- Enterprise Guardrails & Controls (coming soon): Implement governance policies, access restrictions, and pattern enforcement for enhanced security
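To make the schema discovery idea concrete, here is a minimal sketch of how a table schema could be flattened into plain-text context for an LLM. It is an illustration only, not the MCP Server's actual implementation. The field group keys (`dimensionFieldSpecs`, `metricFieldSpecs`, `dateTimeFieldSpecs`) follow the Pinot schema JSON format; the `orders` schema and the `describe_schema` helper are hypothetical.

```python
from typing import Any

# Pinot schemas group columns by role; these keys come from the schema JSON format.
FIELD_GROUPS = {
    "dimensionFieldSpecs": "dimension",
    "metricFieldSpecs": "metric",
    "dateTimeFieldSpecs": "datetime",
}


def describe_schema(schema: dict[str, Any]) -> str:
    """Flatten a Pinot schema into one line per column for use as LLM context."""
    lines = [f"Table {schema['schemaName']}:"]
    for group, role in FIELD_GROUPS.items():
        for spec in schema.get(group, []):
            lines.append(f"- {spec['name']} ({spec['dataType']}, {role})")
    return "\n".join(lines)


# Hypothetical schema for illustration, shaped like a Pinot schema document.
orders_schema = {
    "schemaName": "orders",
    "dimensionFieldSpecs": [{"name": "customer_id", "dataType": "STRING"}],
    "metricFieldSpecs": [{"name": "amount", "dataType": "DOUBLE"}],
    "dateTimeFieldSpecs": [
        {
            "name": "order_ts",
            "dataType": "LONG",
            "format": "1:MILLISECONDS:EPOCH",
            "granularity": "1:MILLISECONDS",
        }
    ],
}

print(describe_schema(orders_schema))
```

Given a description like this, an LLM has the column names and types it needs to write SQL for a plain-English question.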

This versatile interface serves everyone: AI agents, data analysts, and business users who’ve never written SQL. While foundation models show impressive language capabilities, their true business value emerges when they are combined with structured enterprise data, turning general intelligence into specific, actionable insights for your organization.

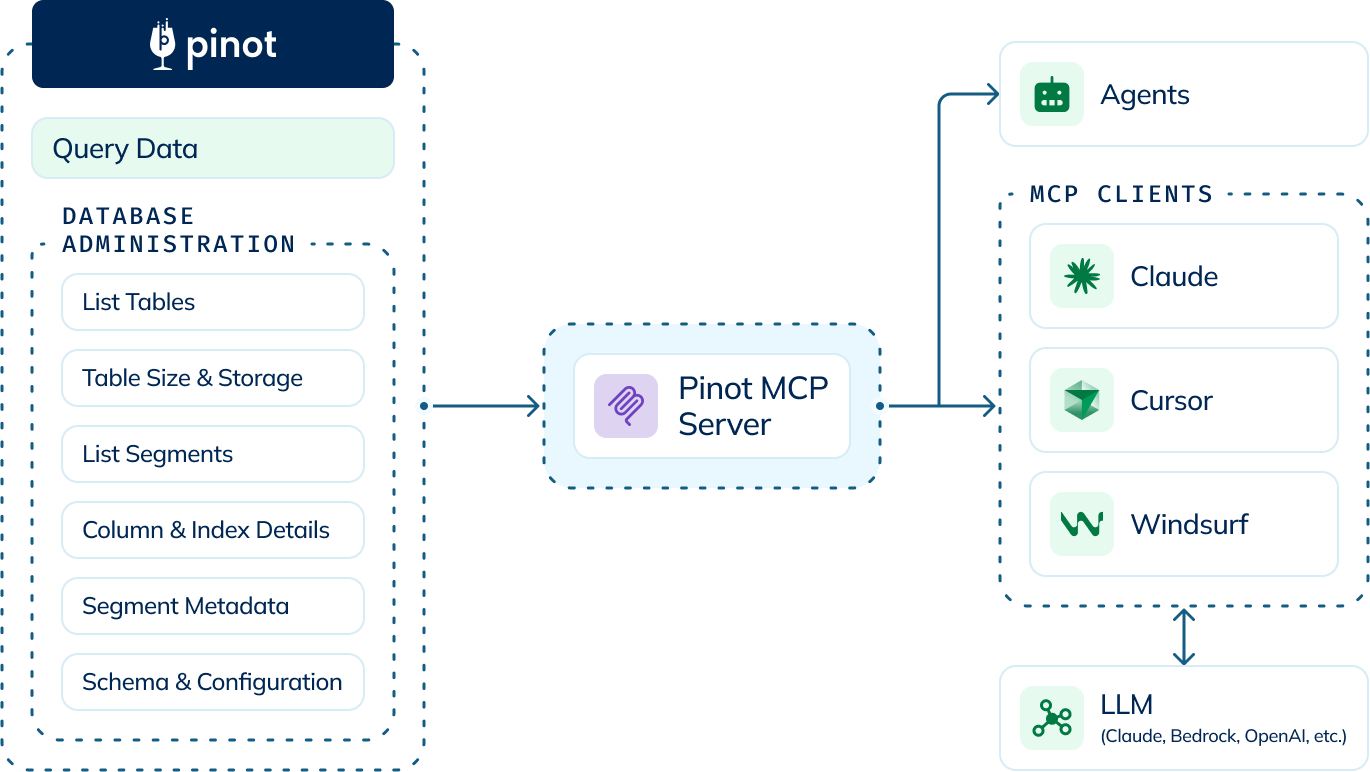

How it Works: MCP Technical Architecture

StarTree MCP Server is built as a lightweight, efficient bridge between AI systems and Apache Pinot’s powerful analytical capabilities. The architecture leverages the production-hardened Pinot Broker Query API that already powers analytics at companies like Uber, LinkedIn, and Stripe.

Architecture Overview

- LLMs (Claude, GPT-4, etc.): Provide the intelligence to interpret user requests

- MCP Clients (Claude Desktop, etc.): Act as the interface between users and MCP servers

- StarTree MCP Server: Translates requests into optimized Pinot queries for real-time data retrieval

- Apache Pinot/StarTree Cloud: Executes low-latency analytical queries across real-time and historical data through distributed columnar processing

- Broker Query API: Manages distributed query execution at scale
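The last hop in this architecture, from the MCP Server to the Broker Query API, can be sketched roughly as follows. This is a simplified illustration and not the server's actual code. It assumes the broker's `POST /query/sql` endpoint and its `resultTable` response shape. The broker address, the `orders` table, the bearer-token header, and both helper functions are hypothetical. The request is built but not sent, so the sketch runs without a cluster.

```python
import json
import urllib.request
from typing import Any, Optional


def build_query_request(
    broker_url: str, sql: str, token: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a POST to the broker's /query/sql endpoint."""
    headers = {"Content-Type": "application/json"}
    if token:
        # Assumed auth scheme; use whatever your cluster actually requires.
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps({"sql": sql}).encode("utf-8")
    return urllib.request.Request(
        f"{broker_url.rstrip('/')}/query/sql",
        data=body,
        headers=headers,
        method="POST",
    )


def rows_as_dicts(response: dict[str, Any]) -> list[dict[str, Any]]:
    """Pair the broker's column names with each row of the result table."""
    table = response["resultTable"]
    columns = table["dataSchema"]["columnNames"]
    return [dict(zip(columns, row)) for row in table["rows"]]


# Hypothetical broker address and table name.
request = build_query_request(
    "http://localhost:8099", "SELECT COUNT(*) AS total FROM orders"
)

# Hand-written sample shaped like a broker response.
sample_response = {
    "resultTable": {
        "dataSchema": {"columnNames": ["total"], "columnDataTypes": ["LONG"]},
        "rows": [[1250]],
    }
}

print(request.full_url)
print(rows_as_dicts(sample_response))
```

To run the query against a live cluster, pass `request` to `urllib.request.urlopen` and decode the JSON body before calling `rows_as_dicts`.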

Deployment Options

Deploy MCP Server where it best suits your needs:

- Local Deployment: Alongside Claude Desktop for data analysts and business users

- Edge Deployment: Near AI agents to minimize latency

- Centralized Deployment: Within your infrastructure for shared access, for integration with private models such as those on AWS Bedrock, or alongside your internal backend services

The MCP Server securely connects to your Pinot cluster, maintaining authentication and adding minimal overhead.

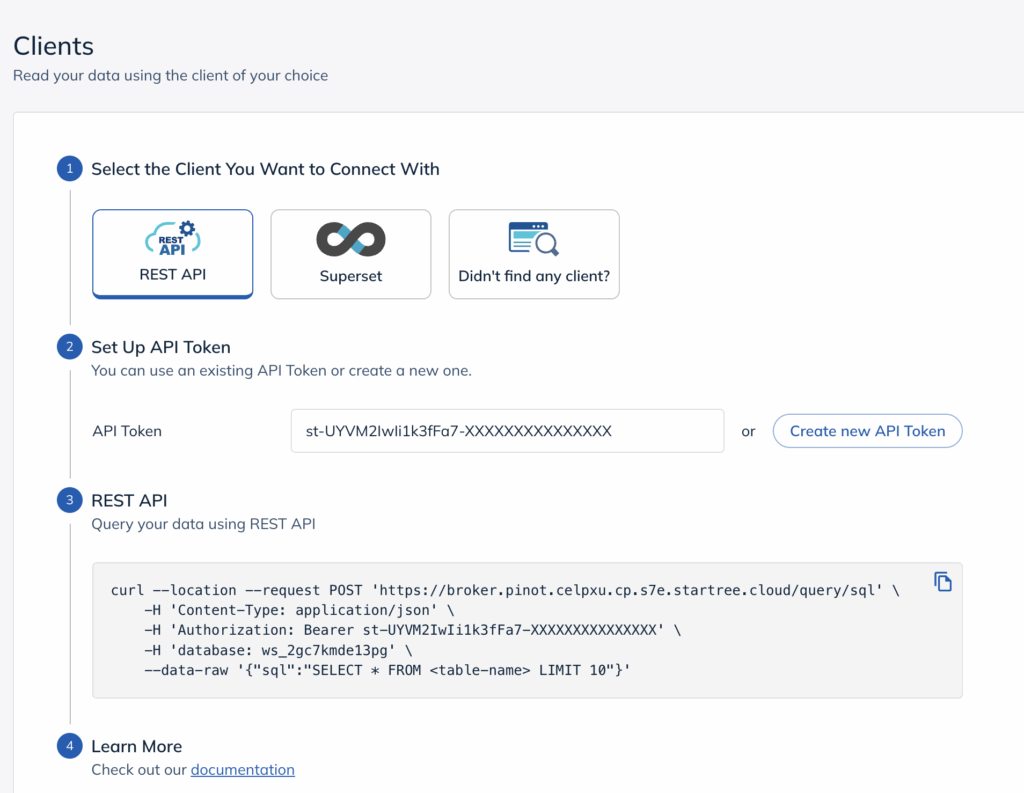

Getting Started with MCP Server

Getting started with StarTree MCP Server is straightforward: install, run the server, configure your client, and start querying your data in natural language. Here’s how to transform your Apache Pinot cluster into an AI-powered analytics engine.

Prerequisites

- Apache Pinot, from either source:

  - Open Source: Apache Pinot Quickstart

  - Managed Service: StarTree Cloud (easiest setup)

- Python 3.9+ installed on your system

- Claude Desktop or another MCP-compatible client

Four Simple Steps

Step 1: Install the MCP Server

Clone the MCP repository and set up your connection to your Pinot cluster:

git clone https://github.com/startreedata/mcp-pinot.git
cd mcp-pinot

Next, configure your Pinot connection by copying .env.example to .env and editing it with your cluster details. For StarTree Cloud users, you’ll find your connection details in the client generation page within your dataset, where you can generate access tokens.

For detailed installation steps, refer to our GitHub repository.
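Before starting the server, it can help to sanity-check the `.env` file. The sketch below parses simple `KEY=VALUE` lines and reports missing settings. The key names `PINOT_BROKER_URL` and `PINOT_TOKEN` are hypothetical placeholders, so substitute the names that actually appear in the repository's `.env.example`.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding whitespace and optional quotes around the value.
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


# Hypothetical keys for illustration; use the names from .env.example.
example_env = """
# Pinot connection
PINOT_BROKER_URL=https://broker.example.com
PINOT_TOKEN="replace-me"
"""

settings = parse_env(example_env)
required = ("PINOT_BROKER_URL", "PINOT_TOKEN")
missing = [key for key in required if not settings.get(key)]
print(settings)
print("missing:", missing)
```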

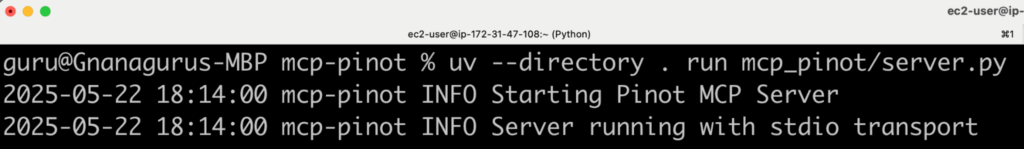

Step 2: Bring Up the Server

Launch the MCP server to establish the bridge between AI and your Pinot data:

uv --directory . run mcp_pinot/server.py

Step 3: Configure Your Client

Add the MCP server to your Claude Desktop configuration so that it points to your MCP server installation:

{

"mcpServers": {

"pinot_mcp_claude": {

"command": "/path/to/uv",

"args": [

"--directory",

"/path/to/mcp-pinot",

"run",

"mcp_pinot/server.py"

],

"env": {}

}

}

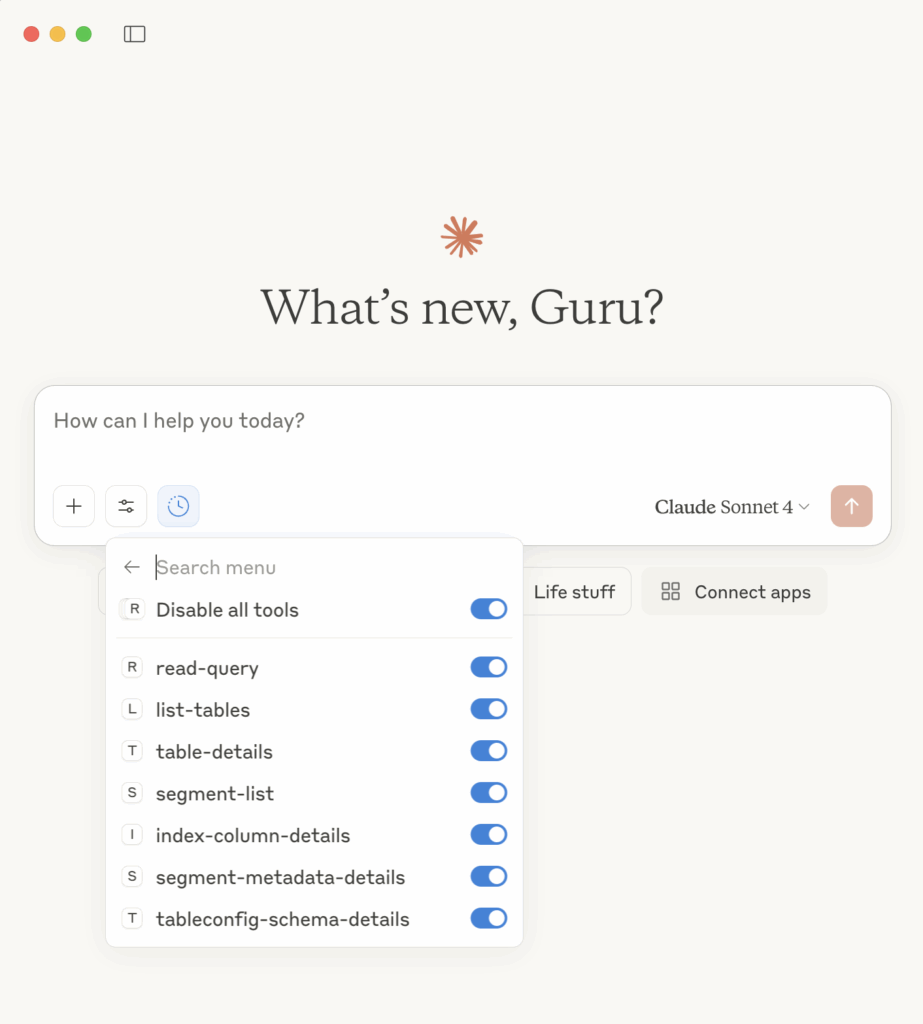

}Code language: JSON / JSON with Comments (json)Restart Claude Desktop and look for the Pinot tools in the tools menu (?️ icon). For detailed configuration steps, refer to our GitHub repository.
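If you already have other MCP servers configured, the Pinot entry needs to be merged into the existing `mcpServers` map rather than replacing it. The sketch below shows one way to do that in Python. The `add_pinot_server` helper and the `other_server` entry are hypothetical, and the paths are the same placeholders used in the configuration above.

```python
import json
from typing import Any


def add_pinot_server(
    config: dict[str, Any],
    uv_path: str,
    repo_path: str,
    name: str = "pinot_mcp_claude",
) -> dict[str, Any]:
    """Return a copy of a client config with the Pinot MCP server entry added."""
    servers = dict(config.get("mcpServers", {}))  # copy so the input is untouched
    servers[name] = {
        "command": uv_path,
        "args": ["--directory", repo_path, "run", "mcp_pinot/server.py"],
        "env": {},
    }
    return {**config, "mcpServers": servers}


# Hypothetical existing configuration with one unrelated server.
existing = {"mcpServers": {"other_server": {"command": "/usr/bin/other", "args": []}}}
updated = add_pinot_server(existing, "/path/to/uv", "/path/to/mcp-pinot")
print(json.dumps(updated, indent=2))
```

The printed JSON can be pasted into the client configuration file once the placeholder paths are replaced with real ones.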

Step 4: Start Analyzing with Natural Language

You’re ready to explore your data! No SQL required—just ask questions in plain English. Here are some examples:

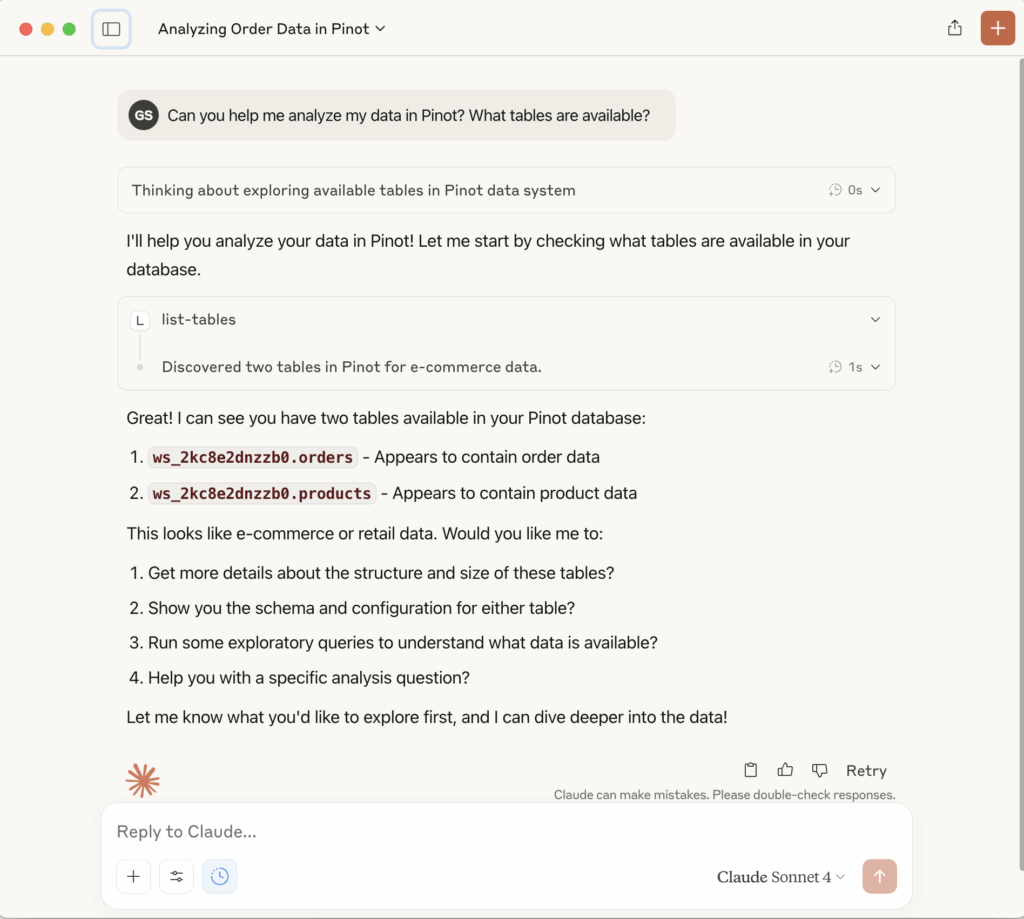

Example 1: Discover Your Data

Try this prompt:

Can you help me analyze my data in Pinot? What tables are available?

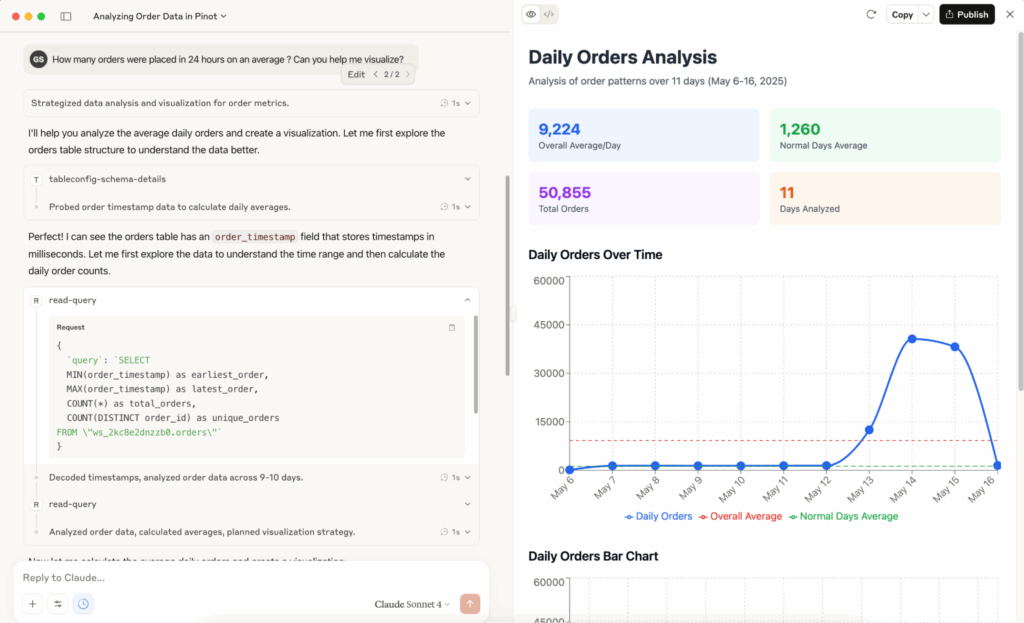
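Behind a prompt like this, the MCP client sends the server a tool invocation as a JSON-RPC 2.0 message using the `tools/call` method. The sketch below builds such a request and reads the text out of a sample reply. The tool name `list-tables` and the reply contents are hypothetical; the tools your server actually exposes are listed in the client's tools menu.

```python
import json
from typing import Any


def tool_call(request_id: int, tool: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": request_id,
            "method": "tools/call",
            "params": {"name": tool, "arguments": arguments},
        }
    )


def text_from_result(message: str) -> str:
    """Join the text parts of a tool result's content list."""
    result = json.loads(message)["result"]
    return "\n".join(
        part["text"] for part in result["content"] if part["type"] == "text"
    )


# "list-tables" is a hypothetical tool name used only for illustration.
request = tool_call(1, "list-tables", {})

# Hand-written sample shaped like an MCP tool result.
sample_reply = json.dumps(
    {
        "jsonrpc": "2.0",
        "id": 1,
        "result": {
            "content": [{"type": "text", "text": "orders, customers"}],
            "isError": False,
        },
    }
)

print(request)
print(text_from_result(sample_reply))
```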

Example 2: Query & Visualize Your Data

Try this prompt:

How many orders were placed in 24 hours on average? Can you help me visualize?

You’re also not limited to Claude Desktop. The MCP server works with other popular clients such as LibreChat, Cursor, and Windsurf, and you can build your own services and agents using MCP.

For Docker deployment options and advanced configurations, check out our GitHub repository.

Happy querying!

The Road Ahead: From MCP Server to Analytics SuperBrain

The StarTree MCP Server launch represents the first step in our broader AI strategy. As highlighted by StarTree’s CEO Kishore Gopalakrishna in his recent keynote, we’re building a comprehensive Analytics SuperBrain architecture that will transform how AI agents interact with enterprise data.

Key highlights of our roadmap include:

- Elevating Agent Intelligence: Continuously advancing MCP Server with richer contextual understanding through pre-defined analytical ‘recipes’ and robust enterprise guardrails to ensure secure, compliant, and efficient AI-scale data interactions

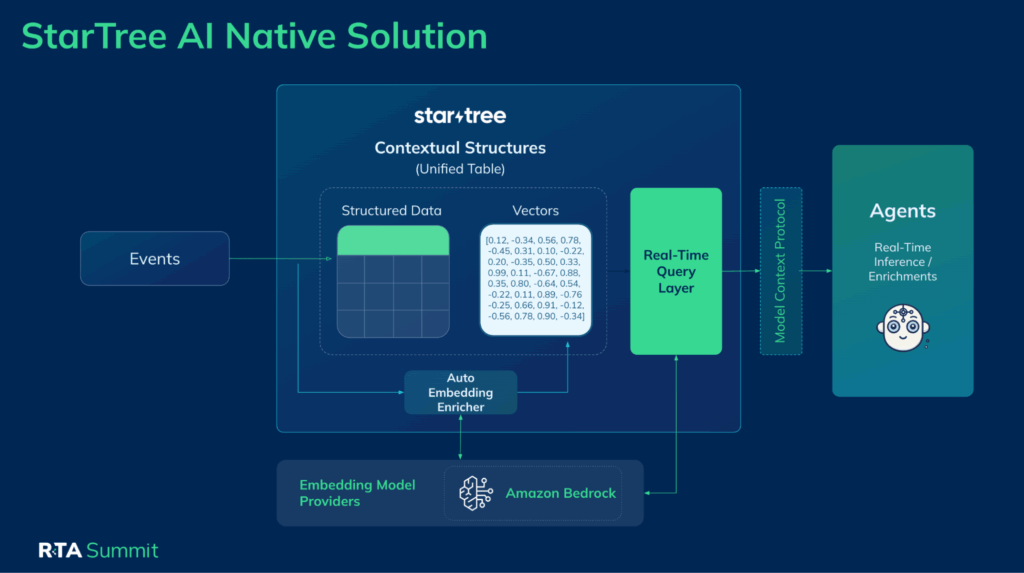

- Native AI Data Fluency: Pioneering native auto-embedding capabilities that will automatically transform your live structured business data into AI-ready vector representations in real time, making your metrics instantly and intuitively understood by AI models

- Democratizing SuperBrain Access: Simplifying deployment and accelerating adoption by developing a seamlessly integrated, hosted MCP option within StarTree Cloud, making the power of the Analytics SuperBrain readily available

Conclusion: The Future of Analytics is Agent-Facing

Apache Pinot has already proven itself at the forefront of user-facing analytics, powering real-time experiences at companies like LinkedIn, Uber, and Stripe. Now, with StarTree MCP Server, we’re evolving to meet the demands of agent-facing analytics—where AI systems require thousands of contextual data points per conversation at machine speed.

This transition represents a fundamental shift in how organizations leverage their data. The most powerful AI applications won’t just be trained on static datasets; they’ll continuously interact with your business data to deliver contextual, real-time insights that adapt as your data changes.

We invite you to join us in building this future, starting today with StarTree MCP Server. Book a meeting to discuss your needs and learn more about how StarTree Cloud opens up new possibilities for real-time agent-facing analytics.