This is the first lesson in the Apache Pinot 101 Tutorial Series:

- Lesson 1: Up and Running with Docker

- Lesson 2: Ingesting Data with Kafka

- Lesson 3: Ingesting Batch Data

- Lesson 4: Indexes for Faster Queries

- Lesson 5: Queries with SQL, Joins

- Lesson 6: Visualization with Superset

In this series, you’ll learn how to get started with Apache Pinot using examples. You’ll build a real-time application that shows the changing price of Bitcoin and the up-to-date value of a Bitcoin portfolio. The series covers core concepts, Docker setup, streaming ingestion, indexing, and querying.

At the end of the six tutorials in this series, you’ll have built a real-time Bitcoin price dashboard using:

- Apache Kafka to ingest a live stream of Bitcoin prices.

- Apache Pinot for low-latency queries on streaming and batch data.

- Apache Superset for visualising the prices of Bitcoin.

In this first lesson, you’ll discover just how easy it is to get up and running with Pinot using Docker.

To follow along with this post, clone the GitHub repository:

https://github.com/startreedata/learn.git

You’ll find the code needed to follow along with this tutorial in the pinot-btc directory.

A Pinot cluster consists of the following processes, which are typically deployed on separate hardware resources in production. In development, they can fit comfortably into Docker containers on a typical laptop.

- Controller: Responsible for managing the other components of the cluster, such as the brokers and the servers. It also provides a REST API for viewing, creating, updating, and deleting the configurations used to manage the cluster.

- ZooKeeper: Stores information about the cluster, such as the list of servers and brokers, the list of tables and their schemas, and which controller is currently the leader.

- Broker: Responsible for accepting queries from the user and scattering them to the applicable servers, gathering the results, and returning the results to the user.

- Server: Responsible for storing the data and performing the computation required to execute queries. In a production environment, there will be many servers allowing more data to be hosted. In our setup, however, there is only one server.

In production, each component scales out as needed for data volume, load, availability, and latency. Pinot clusters in production range from fewer than ten total instances to more than 1,000.

With Docker, we can spin up each component as a container. Rather than starting them individually, let’s use a Docker Compose project to bundle everything together. You can inspect the Docker Compose file at docker-compose.yml.

Begin by bringing up all of the Docker containers by running the following command:

docker compose up -d

This brings up the various components of Pinot in containers of their own.
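The containers can take a little while to become ready after the command returns. As a minimal sketch, the helper below polls the controller's `/health` endpoint until it answers successfully. The function name `wait_for_controller` and the `CONTROLLER_URL` variable are hypothetical, and the sketch assumes the controller is published on localhost:9000, the same address used for the query console later in this lesson.

```shell
# Hypothetical helper: poll the Pinot controller's health endpoint until it
# responds successfully, or give up after a number of attempts.
# Assumes the controller is published on localhost:9000.
CONTROLLER_URL="${CONTROLLER_URL:-http://localhost:9000}"

wait_for_controller() {
  attempts="${1:-30}"   # how many times to poll before giving up
  delay="${2:-2}"       # seconds to sleep between polls
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s silences progress output; -f makes curl fail on HTTP error codes.
    if curl -sf "${CONTROLLER_URL}/health" > /dev/null; then
      echo "controller is healthy"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "controller not healthy after ${attempts} attempts" >&2
  return 1
}
```

For example, `wait_for_controller 30 2` polls for roughly a minute before giving up, which may or may not be enough on a slower machine.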

Check their status by running:

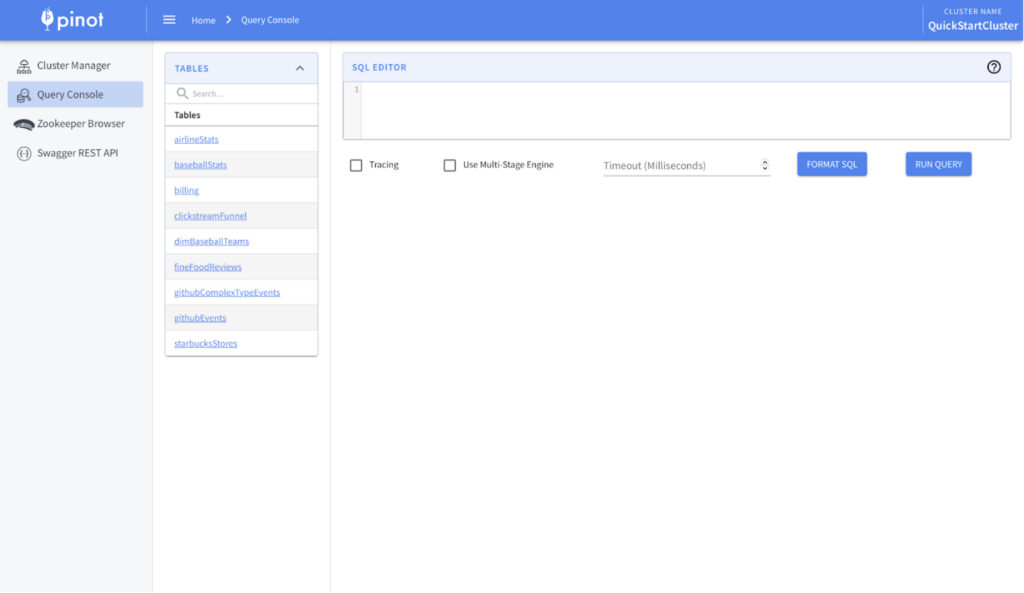

docker-compose psWith the containers up and running you can now access the Pinot query console. Navigate to http://localhost:9000. Once there, click on “Query Console” on the left.
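The controller's REST API offers another way to look at the cluster from the command line. The sketch below wraps two of its endpoints, `/instances` and `/tables`, in small shell functions. The helper names (`pinot_get`, `list_instances`, `list_tables`) are hypothetical, and the sketch again assumes the controller is reachable at localhost:9000.

```shell
# Hypothetical helpers around the controller's REST API.
# Assumes the controller is published on localhost:9000.
CONTROLLER_URL="${CONTROLLER_URL:-http://localhost:9000}"

# GET a path from the controller and print the JSON response body.
pinot_get() {
  curl -sf -H "Accept: application/json" "${CONTROLLER_URL}$1"
}

# Instances registered with the cluster (controller, broker, server).
list_instances() { pinot_get /instances; }

# Tables defined in the cluster; likely empty on a fresh cluster.
list_tables() { pinot_get /tables; }
```

Running `list_instances` should show whether the broker and server have registered with the controller, and `list_tables` gives a quick view of which tables exist.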

There is no data in your Pinot database yet, so in the next lesson you’ll learn how to stream in a feed of live Bitcoin prices.

Next Lesson: Ingesting Streaming Data from Kafka →