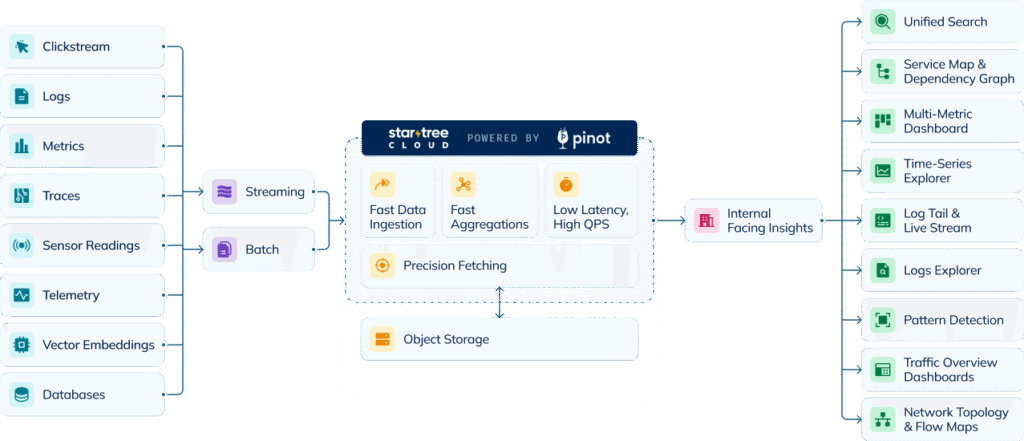

Streaming Stats, Stickier Sites, Simpler Stacks

Sub-second latency. 10K, 20K, even 80K+ concurrent queries. Finally, a system that keeps up.

When it’s customer-facing, every millisecond matters

Internal dashboards can afford delays. Customer-facing apps cannot. External users expect answers instantly, and when metrics load slowly, customers don’t stick around.

But scale makes this hard. Supporting tens of thousands of concurrent queries while still delivering sub-second latency has traditionally been impossible without brittle workarounds.

The old pattern? Pre-aggregate data, move it into a separate serving layer, and brace yourself for endless pipeline rewrites every time requirements change. The result: complexity, cost, and fragility.

StarTree changes the equation…

Real-time analytics no longer has to be expensive

For years, “real-time” meant bending a traditional data warehouse or lake into something it was never designed to do — with ballooning compute costs and endless serving-layer complexity.

StarTree flips this equation. When real-time is the core design, compute costs fall dramatically: queries resolve faster, indexes reduce overhead, and concurrency scales without runaway spend.

Storage, too, no longer carries a tradeoff. With Iceberg and S3 integration, data lives cheaply where it belongs — yet insights are still delivered with sub-second SLAs.

The result: faster apps, simpler architectures, and significantly lower TCO.

Performance + simplicity + efficiency for customer-facing insights

Extreme Concurrency

Sub-Second Latency

Fewer Hops, Less Movement

Cost-Efficient by Design

Purpose-built for customer-facing apps