In this talk at RTA Summit 2025, Yusuf Külah shares how CrowdStrike turns its Kafka event firehose into real-time analytics with Apache Pinot.

CrowdStrike’s backend runs on dozens of microservices, many of which consume a shared firehose of events via the Kafka distributed event streaming platform, said Yusuf Külah, a CrowdStrike senior engineer, in a technical session at the Real-Time Analytics Summit hosted by StarTree. Kafka acts as a central messaging system, broadcasting a stream of data like system metrics from endpoint agents.

Managing variable loads

The problem CrowdStrike faced was that Kafka topics experience highly variable loads, often spiking when new customers come on board or when malware causes a surge in system events. Some microservices, such as front-end request handlers, must respond quickly in such situations, while others take longer to digest data.

To keep service-level objectives (SLOs) intact, the team built an Apache Pinot-powered monitoring layer that tracks event volume in real time and publishes signals to a data bus when thresholds are crossed. This “traffic cop” approach lets services apply throttling or other mitigation plans dynamically.
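The core of a “traffic cop” can be sketched as a function that compares the latest per-topic event rate against a threshold and emits a signal only when the topic's state changes. This is a minimal sketch, assuming the rate comes from a periodic Pinot count query and that signals are plain dicts handed to some publisher; `TrafficCop`, the watermark values, and the signal shape are hypothetical, not CrowdStrike's implementation. Using separate high and low watermarks (hysteresis) is our own design choice, so a rate hovering near one threshold does not flood the data bus with alternating signals.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TrafficCop:
    """Hypothetical threshold monitor: emits a signal when a topic's event rate
    moves into or out of an overloaded state."""

    high_watermark: float  # events/sec at which a topic is flagged as overloaded
    low_watermark: float   # events/sec below which the flag is cleared (hysteresis)
    _overloaded: dict[str, bool] = field(default_factory=dict, init=False, repr=False)

    def observe(self, topic: str, events_per_sec: float) -> dict | None:
        """Return a signal dict on a state change, otherwise None."""
        was_overloaded = self._overloaded.get(topic, False)
        if not was_overloaded and events_per_sec >= self.high_watermark:
            self._overloaded[topic] = True
            return {"topic": topic, "state": "THROTTLE", "rate": events_per_sec}
        if was_overloaded and events_per_sec <= self.low_watermark:
            self._overloaded[topic] = False
            return {"topic": topic, "state": "NORMAL", "rate": events_per_sec}
        return None  # no state change, so nothing to publish


cop = TrafficCop(high_watermark=50_000, low_watermark=30_000)
for rate in [10_000, 55_000, 60_000, 40_000, 25_000]:
    signal = cop.observe("endpoint-events", rate)
    if signal:
        print(signal)  # a real system would publish this to the data bus
# prints a THROTTLE signal at 55000 and a NORMAL signal at 25000
```

Consumers then decide locally what “THROTTLE” means for them, which matches the idea that each service implements its own mitigation plan.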

Supporting security analytics

Using the same Kafka event stream, CrowdStrike applied Pinot to a second challenge: detecting data exfiltration. CrowdStrike aggregates and deduplicates events such as file transfers and outbound network activity in real time so that analysts get a clean feed of what’s leaving the system and where it’s going.
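Deduplication plus aggregation can be illustrated with a small in-memory sketch: drop any event whose ID has already been seen, then sum bytes per (source, destination) pair. The field names (`event_id`, `src`, `dst`, `bytes`) are assumptions, and the talk does not say where exactly this step runs; the sketch only shows the logic. A real deduplicator would also bound its memory, for example by expiring old IDs after a time window, rather than keeping every ID forever as this one does.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def aggregate_egress(events: Iterable[dict]) -> dict[tuple[str, str], int]:
    """Drop duplicate events (same event_id) and sum bytes per (src, dst) pair."""
    seen_ids: set[str] = set()  # unbounded here; a real system would expire old IDs
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for event in events:
        if event["event_id"] in seen_ids:
            continue  # duplicate delivery of an event we already counted
        seen_ids.add(event["event_id"])
        totals[(event["src"], event["dst"])] += event["bytes"]
    return dict(totals)


events = [
    {"event_id": "e1", "src": "host-a", "dst": "files.example.com", "bytes": 500},
    {"event_id": "e1", "src": "host-a", "dst": "files.example.com", "bytes": 500},
    {"event_id": "e2", "src": "host-a", "dst": "files.example.com", "bytes": 300},
    {"event_id": "e3", "src": "host-b", "dst": "backup.example.net", "bytes": 200},
]
print(aggregate_egress(events))
# {('host-a', 'files.example.com'): 800, ('host-b', 'backup.example.net'): 200}
```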

Security analysts can monitor a dashboard to slice and dice data by destination, source, or other dimensions. This enables them to quickly pinpoint, for example, a rogue internal user shipping data to an unapproved endpoint.
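Slicing by a dimension and spotting an unexpected destination boils down to a group-by plus a filter. Against Pinot this would be a SQL query along the lines of `SELECT dst, SUM(bytes) FROM egress GROUP BY dst` (table and column names hypothetical); the Python sketch below shows the same logic over already-aggregated rows so it runs on its own. The allowlist and row layout are illustrative assumptions.

```python
from __future__ import annotations

from collections import Counter


def slice_by(rows: list[dict], dimension: str) -> Counter:
    """Sum bytes grouped by any dimension (e.g. "dst" or "src"), like a GROUP BY."""
    totals: Counter = Counter()
    for row in rows:
        totals[row[dimension]] += row["bytes"]
    return totals


def unapproved_egress(rows: list[dict], allowlist: set[str]) -> list[tuple[str, str, int]]:
    """Return (src, dst, bytes) for traffic to destinations outside the allowlist,
    largest transfers first."""
    flagged = [(r["src"], r["dst"], r["bytes"]) for r in rows if r["dst"] not in allowlist]
    return sorted(flagged, key=lambda t: t[2], reverse=True)


rows = [
    {"src": "alice-laptop", "dst": "sharepoint.corp", "bytes": 4_000},
    {"src": "bob-laptop", "dst": "sharepoint.corp", "bytes": 1_000},
    {"src": "bob-laptop", "dst": "paste.example.org", "bytes": 90_000},
]
print(slice_by(rows, "dst").most_common())
# [('paste.example.org', 90000), ('sharepoint.corp', 5000)]
print(unapproved_egress(rows, allowlist={"sharepoint.corp"}))
# [('bob-laptop', 'paste.example.org', 90000)]
```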

Wrestling with Protobuf complexity

CrowdStrike’s events are encoded in Protobuf, a compact data serialization format that lets them define structured data using schemas, including nested types and enumerations. While Protobuf is great for performance and consistency, it’s less effective when schemas are evolving, deeply nested, and not uniform across environments.

A bigger problem was that Protobuf changes forced restarts of Pinot servers, since Pinot had to reload descriptors with each change. Parsing complex Protobufs was slow, especially when only part of the message was relevant.

CrowdStrike’s solution was to introduce a preprocessing layer. This component takes complex Protobuf events, extracts only the fields that are needed, and emits a “lean” JSON version aligned with Pinot’s schema. This offloads heavy parsing from Pinot, improves performance, skips unneeded fields, and avoids Pinot restarts when Protobuf schemas change. The tradeoff is that Kafka carries a second, lean copy of the event stream, which adds storage cost. CrowdStrike determined, however, that the benefits outweighed the extra storage.
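A preprocessor of this kind can be sketched as a mapping from field paths in the decoded event to flat columns in the Pinot schema. To keep the example self-contained, a nested dict stands in for a decoded Protobuf message; the field paths and column names are hypothetical. Only the mapped paths are read, so deeply nested data the schema does not need is never copied, and a missing path yields `null` instead of an error, which tolerates schemas that differ across environments.

```python
from __future__ import annotations

import json
from typing import Any

# Hypothetical mapping: Pinot column -> dotted path into the decoded event.
COLUMN_PATHS = {
    "eventId": "header.id",
    "timestampMs": "header.timestampMs",
    "hostId": "sensor.host.id",
    "dstIp": "payload.network.remoteAddress",
    "bytesOut": "payload.network.bytesSent",
}


def extract(message: dict[str, Any], path: str) -> Any:
    """Follow a dotted path through nested dicts; return None if any hop is missing."""
    node: Any = message
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None  # tolerate schema differences between environments
        node = node[key]
    return node


def to_lean_json(message: dict[str, Any]) -> str:
    """Emit a flat JSON record containing only the columns Pinot needs."""
    lean = {column: extract(message, path) for column, path in COLUMN_PATHS.items()}
    return json.dumps(lean, separators=(",", ":"))


decoded = {
    "header": {"id": "e42", "timestampMs": 1700000000000, "traceContext": {"spans": [1, 2, 3]}},
    "sensor": {"host": {"id": "h-7", "os": {"name": "linux", "kernel": "6.1"}}},
    "payload": {"network": {"remoteAddress": "203.0.113.9", "bytesSent": 5120}},
}
print(to_lean_json(decoded))
# {"eventId":"e42","timestampMs":1700000000000,"hostId":"h-7","dstIp":"203.0.113.9","bytesOut":5120}
```

When the upstream schema changes, only `COLUMN_PATHS` needs updating in the preprocessor; Pinot keeps consuming the same flat JSON shape.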

Kafka’s dynamic addressing challenge

In a dynamic infrastructure, Kafka broker addresses change frequently, and Pinot has to keep up. Failing to refresh its broker lists promptly risks ingestion delays or data loss, both of which are unacceptable in security analytics.

DNS-based discovery was an obvious solution but not an option due to internal security policies, so CrowdStrike built an automated broker updater. This keeps Pinot’s configuration in sync with Kafka’s current state, ensuring smooth ingestion even as the cluster evolves. As a bonus, the updater mechanism also helped prevent configuration drift. If a Pinot table’s settings deviated from the official configuration, the updater would automatically realign it.
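The heart of such an updater is a reconciliation step: build the desired table config from the official definition plus the current broker list, compare it with what Pinot is actually running, and push an update only when the two differ. This sketch assumes the broker list lives under the `stream.kafka.broker.list` key of the table's Kafka stream config, using a simplified table-config layout; how brokers are discovered and how the update is applied to Pinot are left as hypothetical inputs and outputs. Because the desired config always starts from the official definition, manual drift gets overwritten in the same pass, mirroring the bonus behavior described above.

```python
from __future__ import annotations

import copy

BROKER_KEY = "stream.kafka.broker.list"


def desired_config(official: dict, brokers: list[str]) -> dict:
    """Start from the official (source-of-truth) config and inject the live broker list."""
    desired = copy.deepcopy(official)  # never mutate the official definition
    stream = desired["tableIndexConfig"]["streamConfigs"]
    stream[BROKER_KEY] = ",".join(sorted(brokers))  # sorted so reordering isn't drift
    return desired


def reconcile(official: dict, running: dict, brokers: list[str]) -> dict | None:
    """Return the config to push if the running config differs, else None."""
    desired = desired_config(official, brokers)
    return None if desired == running else desired


official = {
    "tableName": "egress",
    "tableType": "REALTIME",
    "tableIndexConfig": {"streamConfigs": {"stream.kafka.topic.name": "egress", BROKER_KEY: ""}},
}
running = desired_config(official, ["kafka-1:9092", "kafka-2:9092"])
running["tableIndexConfig"]["streamConfigs"]["stream.kafka.topic.name"] = "egress-test"  # manual drift

update = reconcile(official, running, ["kafka-2:9092", "kafka-3:9092"])
print(update["tableIndexConfig"]["streamConfigs"])
# {'stream.kafka.topic.name': 'egress', 'stream.kafka.broker.list': 'kafka-2:9092,kafka-3:9092'}
```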

Turning point-in-time queries into feeds

Pinot is fast, but single queries are just snapshots. CrowdStrike needed a continuous data feed for some services, especially those that make decisions based on trends, such as a spike in data egress volumes over a defined period of time.

Running hundreds or thousands of identical point-in-time queries from microservice replicas would have been wasteful and redundant, so the CrowdStrike team built a query scheduler that converts snapshots into continuous feeds. The scheduler deduplicates queries, reduces processing load, and keeps consuming services supplied with up-to-date, real-time context.
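A scheduler along these lines can be sketched as a registry keyed by normalized query text: every replica that subscribes to the same query shares one entry, and each tick runs every unique query once and fans the result out to all subscribers. `QueryScheduler`, the whitespace-only normalization, and the `run_query` callable (which would wrap a Pinot broker call in practice) are hypothetical; a production version would also need per-query intervals, error handling, and a transport to remote replicas.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable

Subscriber = Callable[[Any], None]


class QueryScheduler:
    """Hypothetical scheduler: runs each distinct query once per tick, fans out results."""

    def __init__(self, run_query: Callable[[str], Any]) -> None:
        self._run_query = run_query
        self._subscribers: dict[str, list[Subscriber]] = defaultdict(list)

    @staticmethod
    def _normalize(sql: str) -> str:
        # Collapse whitespace so trivially different copies dedupe together.
        # Case is preserved because SQL string literals are case-sensitive.
        return " ".join(sql.split())

    def subscribe(self, sql: str, callback: Subscriber) -> None:
        self._subscribers[self._normalize(sql)].append(callback)

    def tick(self) -> int:
        """Execute every unique query once and deliver results; return queries run."""
        for sql, callbacks in self._subscribers.items():
            result = self._run_query(sql)  # one Pinot round trip per distinct query
            for callback in callbacks:
                callback(result)
        return len(self._subscribers)


calls: list[str] = []


def fake_pinot(sql: str) -> int:
    calls.append(sql)  # stand-in for a real Pinot query
    return 42


scheduler = QueryScheduler(fake_pinot)
received: list[int] = []
for i in range(100):  # 100 replicas asking for the same trend metric
    sql = "SELECT SUM(bytes) FROM egress" if i % 2 else "SELECT  SUM(bytes)\nFROM egress"
    scheduler.subscribe(sql, received.append)

print(scheduler.tick(), len(calls), len(received))  # 1 1 100
```

Calling `tick()` on a timer turns the one-off snapshot into a feed: each interval costs one query regardless of how many replicas are listening.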

The big picture

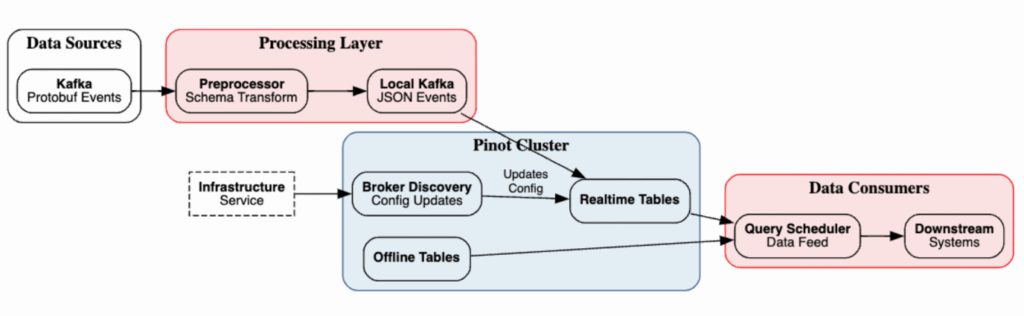

The system comprises four layers as illustrated:

- Data sources are primarily Kafka, but also include some batch feeds.

- The Preprocessor parses Protobufs and emits lean JSON events.

- The Apache Pinot cluster is the core analytics engine. It ingests lean events into real-time tables and optionally into batch-aggregated offline tables for long-term queries.

- Data consumers are the downstream services and dashboards. Some query Pinot directly, while others rely on the continuous feed via the query scheduler.

Pinot is deployed in a multi-tenant setup, with some servers dedicated to individual tenants depending on the workload. This design balances performance and isolation.

The results

CrowdStrike’s Pinot deployment has been able to handle whatever real-time data is thrown at it. A single table replica handles up to 120,000 events per second. One table has seen over 5 billion ingested events. The busiest table supports up to 25,000 queries per second. All of this runs across five global production environments and more than 10 Pinot clusters.

CrowdStrike’s real-time analytics system isn’t just fast; it’s resilient, scalable, and purpose-built for high-stakes cybersecurity work. From preprocessing complex data to automating infrastructure adaptation, the team’s approach demonstrates how thoughtful engineering can turn raw event firehoses into actionable insights.

Learn more

StarTree provides Apache Pinot as a managed service with many extended capabilities. Book a meeting with one of our experts to learn how Apache Pinot can help you scale under variable loads.